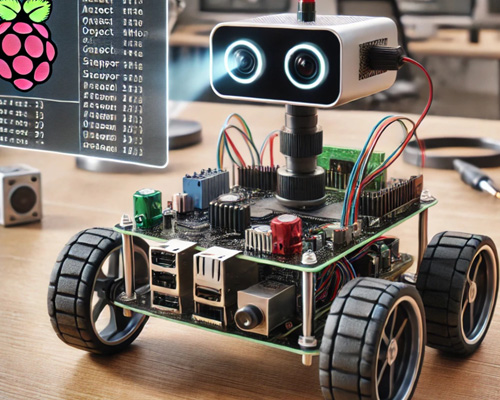

AI-Powered Object Detection Robot

An autonomous robotic platform equipped with real-time computer vision and deep learning capabilities for intelligent object recognition and interaction.

Multi-angle camera array with depth sensing and infrared capabilities

NVIDIA Jetson Nano running optimized neural network inference pipelines

6-axis servo system with PID controllers for precise robotic movement

Web-based control panel with live video feed and telemetry dashboard
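
The PID control mentioned for the servo system can be illustrated with a minimal sketch. This is not the robot's actual controller: the `PID` class, the gains, the output limits, and the toy `simulate_joint` model (a joint whose angle simply integrates the commanded rate) are all assumptions chosen for illustration.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class PID:
    """Textbook PID controller with output clamping (illustrative only)."""
    kp: float
    ki: float
    kd: float
    out_min: float = -90.0   # assumed limit, e.g. degrees per second
    out_max: float = 90.0
    _integral: float = field(default=0.0, init=False)
    _prev_error: Optional[float] = field(default=None, init=False)

    def update(self, setpoint: float, measured: float, dt: float) -> float:
        """Return the clamped control output for one time step of length dt > 0."""
        error = setpoint - measured
        self._integral += error * dt
        # No derivative term on the first call, since there is no previous error.
        if self._prev_error is None:
            derivative = 0.0
        else:
            derivative = (error - self._prev_error) / dt
        self._prev_error = error
        output = self.kp * error + self.ki * self._integral + self.kd * derivative
        return max(self.out_min, min(self.out_max, output))


def simulate_joint(pid: PID, target: float, steps: int = 200, dt: float = 0.01) -> float:
    """Toy model (assumption): the joint angle integrates the commanded rate."""
    angle = 0.0
    for _ in range(steps):
        rate = pid.update(target, angle, dt)
        angle += rate * dt
    return angle
```

Calling `simulate_joint(PID(kp=4.0, ki=0.0, kd=0.0), 30.0)` drives the simulated joint toward 30 degrees. On real hardware the gains would need tuning against the actual servo response.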

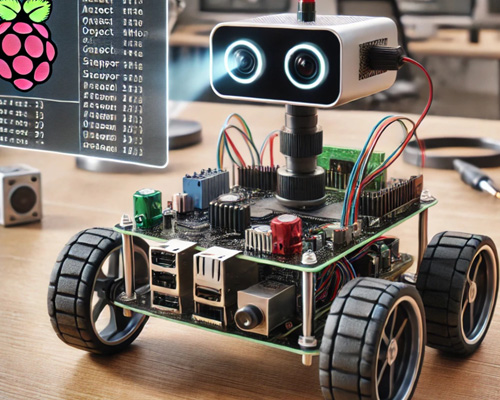

YOLOv5-based detection running at 30 FPS, identifying more than 15 object classes with bounding-box visualization.

A* and RRT algorithms for autonomous navigation around obstacles with dynamic re-routing capabilities.

Gripper control with force feedback sensors for safe and precise pick-and-place operations.

On-device transfer learning allows the robot to recognize new objects with minimal training samples.

Stereoscopic vision combined with depth sensors for accurate 3D spatial mapping of the environment.

Low-latency WebRTC streaming for remote control with keyboard, gamepad, or mobile input support.
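
As a rough illustration of the A* planning mentioned above, here is a minimal sketch on a 4-connected occupancy grid with a Manhattan-distance heuristic. The grid representation, the `astar` function name, and the unit step cost are assumptions for this example, not details of the robot's planner. RRT is not shown.

```python
import heapq


def astar(grid, start, goal):
    """Shortest path on a grid of 0 (free) and 1 (blocked) cells.

    Returns a list of (row, col) cells from start to goal, or None if
    the goal cannot be reached.
    """
    rows, cols = len(grid), len(grid[0])

    def heuristic(cell):
        # Manhattan distance is admissible for 4-connected unit-cost moves.
        return abs(cell[0] - goal[0]) + abs(cell[1] - goal[1])

    open_heap = [(heuristic(start), 0, start)]
    came_from = {}
    best_cost = {start: 0}

    while open_heap:
        _, cost, current = heapq.heappop(open_heap)
        if current == goal:
            # Walk the parent links back to the start.
            path = [current]
            while current in came_from:
                current = came_from[current]
                path.append(current)
            return path[::-1]
        if cost > best_cost[current]:
            continue  # stale heap entry, a cheaper route was already found
        r, c = current
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] == 0:
                new_cost = cost + 1
                if new_cost < best_cost.get((nr, nc), float("inf")):
                    best_cost[(nr, nc)] = new_cost
                    came_from[(nr, nc)] = current
                    heapq.heappush(
                        open_heap,
                        (new_cost + heuristic((nr, nc)), new_cost, (nr, nc)),
                    )
    return None
```

One simple way to approximate dynamic re-routing with this sketch is to mark a newly detected obstacle as `1` in the grid and call `astar` again from the robot's current cell.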

3D-printed chassis and component mounting designed in Fusion 360 with stress analysis simulation.

Camera calibration, image preprocessing, and YOLOv5 model training on a custom dataset of 5,000 images.

Servo motor integration with inverse kinematics for smooth 6-DOF arm movement and locomotion.

ROS2 framework connecting vision, planning, and control nodes with real-time message passing.

Iterative testing in controlled environments with progressively complex navigation and detection scenarios.
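
To give a feel for the inverse kinematics step in the build process, the sketch below solves the much simpler case of a 2-link planar arm analytically. It is a deliberately reduced stand-in for the 6-DOF arm: the function names `ik_2link` and `fk_2link`, the link lengths, and the choice of the non-negative elbow solution are all assumptions for illustration.

```python
import math


def ik_2link(x, y, l1, l2):
    """Joint angles (shoulder, elbow) in radians that place the tip at (x, y).

    Returns None when the target is out of reach. Of the two mirror-image
    solutions, this returns the one with a non-negative elbow angle.
    """
    dist_sq = x * x + y * y
    # Law of cosines gives the elbow angle from the target distance.
    cos_elbow = (dist_sq - l1 * l1 - l2 * l2) / (2.0 * l1 * l2)
    if abs(cos_elbow) > 1.0 + 1e-9:
        return None
    cos_elbow = max(-1.0, min(1.0, cos_elbow))  # guard against rounding
    elbow = math.acos(cos_elbow)
    shoulder = math.atan2(y, x) - math.atan2(
        l2 * math.sin(elbow), l1 + l2 * math.cos(elbow)
    )
    return shoulder, elbow


def fk_2link(shoulder, elbow, l1, l2):
    """Forward kinematics, used here to check the inverse solution."""
    x = l1 * math.cos(shoulder) + l2 * math.cos(shoulder + elbow)
    y = l1 * math.sin(shoulder) + l2 * math.sin(shoulder + elbow)
    return x, y
```

Feeding the result of `ik_2link` back into `fk_2link` should reproduce the target position, which makes a convenient sanity check. A full 6-DOF arm typically needs a numerical or library-based solver instead of a closed-form expression like this one.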

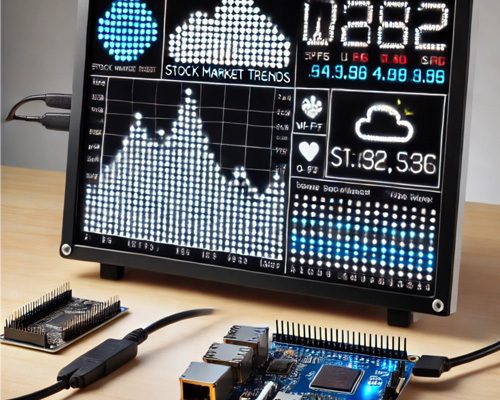

From autonomous navigation to computer vision, our team creates custom robotic solutions that push the boundaries of intelligent automation.